When Digital Reality Shifts: AI-Generated Videos, Political Messaging, and Public Trust

Across the United States, large crowds recently gathered under the banner of the “No Kings” protests, nationwide demonstrations centered on concerns about expanding presidential authority and the perceived weakening of democratic checks and balances. Yet what set this moment apart was how swiftly it collided with the growing influence of artificial intelligence. Within hours, a digitally altered video shared by President Donald Trump became a national talking point, underscoring how technology now shapes both politics and perception.

A Movement for Accountability

The “No Kings” protests were held in more than two thousand cities, from New York and Washington, D.C., to Los Angeles and Miami. Participants carried signs reading “Democracy, Not Monarchy” and “The Constitution Is Not Optional.” The message, according to organizers, was simple: presidential power should remain accountable to the people.

Several high-profile lawmakers and civic leaders joined marchers across multiple states, emphasizing that the rallies represented not just political dissent but an affirmation of democratic principles. “We will respect the rule of law, stand up for our rights, and protect our democracy through peaceful protest,” one speaker declared. The demonstrations remained orderly, marked by chants, speeches, and music rather than confrontation.

An AI Video Sparks Debate

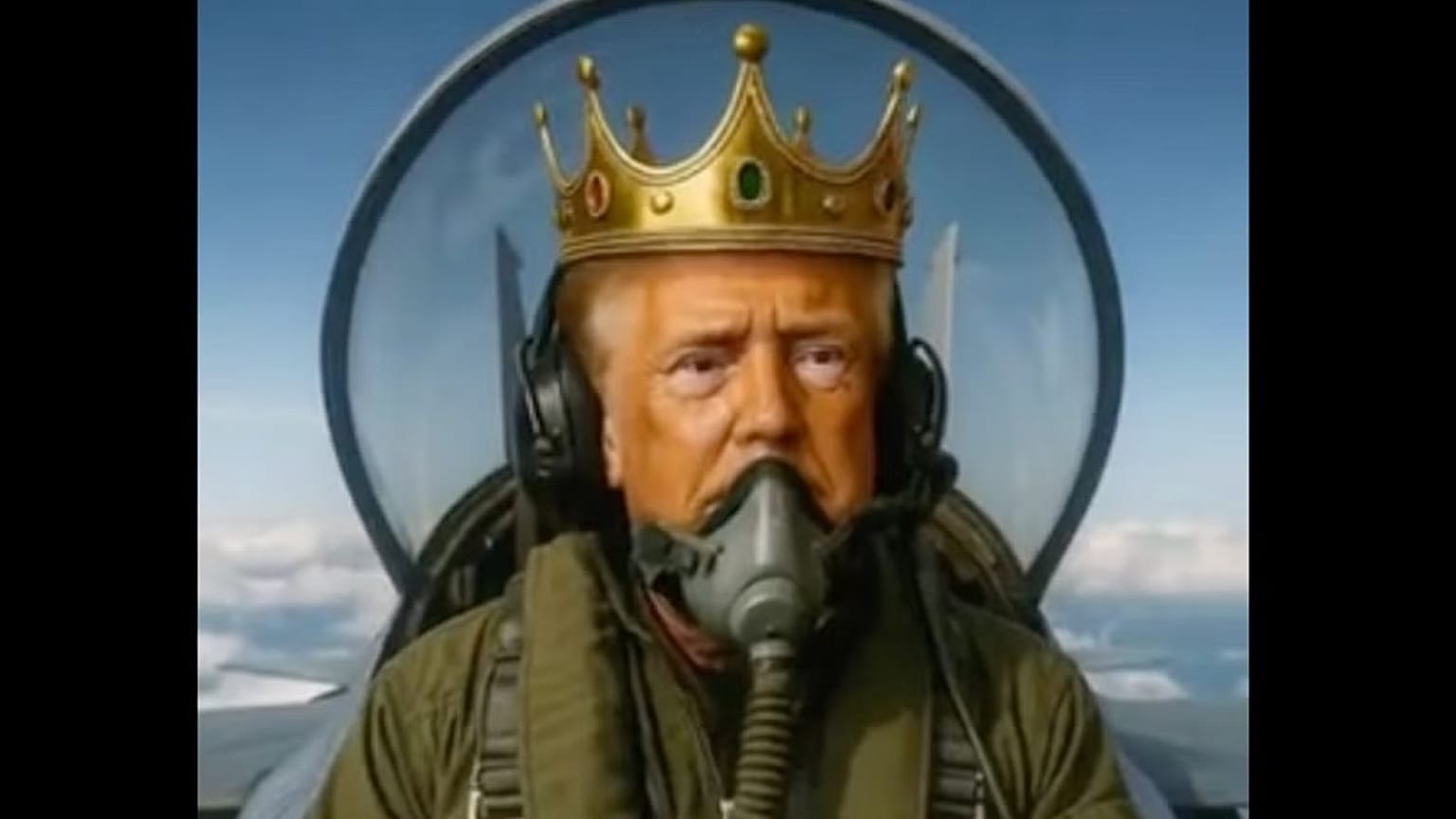

Soon after the protests began trending on social media, President Trump posted a digitally fabricated video that instantly drew attention. The short clip, apparently generated with artificial-intelligence tools, portrayed a stylized, crown-wearing Trump flying a fighter jet labeled “King Trump” over a city skyline reminiscent of New York. It was set to a rock soundtrack and edited with cinematic flair.

The video was unmistakably artificial, yet its symbolism—and its timing—provoked a wave of discussion. Critics argued that sharing such imagery during mass protests about authoritarianism trivialized legitimate public concerns. Supporters dismissed the controversy as humor, claiming it was satire aimed at exaggerating political opponents’ criticisms. Regardless of interpretation, the episode demonstrated how synthetic media can instantly redirect national attention.

The Rise of Synthetic Media

AI-generated imagery, often called synthetic media or deepfakes, uses machine-learning algorithms to produce realistic-looking human features and settings. Once limited to entertainment, it has become a powerful instrument in political communication. Researchers note that such technology allows anyone, even with minimal technical skill, to create convincing visual fabrications that blur the line between parody and misinformation.

In this case, the imagery drew widespread online engagement. Millions viewed and shared the clip, sometimes without realizing it was computer-generated. That confusion revealed a critical weakness in today’s digital environment: the inability of audiences to easily distinguish between authentic and manipulated content.

Why It Matters

Experts warn that the growing accessibility of generative AI could erode trust in traditional media and political institutions. When synthetic videos are shared by high-visibility figures, the effects extend far beyond humor—they can normalize altered imagery as a form of political messaging. Even when viewers understand that a video is artificial, the visual impact can still influence emotions and reinforce pre-existing biases.

The risk is twofold. First, repeated exposure to fabricated material may lead citizens to doubt legitimate footage, weakening the foundation of shared reality. Second, those who believe synthetic content to be authentic may form opinions based on misinformation. Both outcomes undermine democratic dialogue, which depends on verifiable truth.

Public Reaction and Political Response

Public reaction to the AI video reflected the country’s broader polarization. Many viewers expressed disbelief that such content would come from a sitting president, calling it unpresidential. Others argued that it was simply a creative form of political expression. Social-media platforms filled with memes, commentary, and fact-checking threads, while television networks debated whether airing the clip amplified its message or served the public interest by exposing its artificiality.

Meanwhile, Vice President JD Vance shared a separate AI-edited image depicting political rivals in a symbolic royal court—further fueling debate about taste, tone, and responsibility in digital communication. Supporters described the imagery as satire; opponents saw it as undermining civic respect. The arguments revealed how generative AI now functions as a new rhetorical weapon in public life.

Symbolism and the “King” Narrative

The “king” imagery touched directly on the protests’ theme. To many demonstrators, it appeared to validate their concerns about executive overreach. To Trump’s base, it signaled irony and defiance. This duality is precisely why AI-assisted symbolism is so potent: it allows a single piece of content to convey multiple meanings to different audiences.

Analysts observe that such theatrics mark a shift in political style—from policy-driven messaging to spectacle-driven leadership. Digital showmanship can energize followers but risks turning governance into performance art, where image supersedes accountability.

Media Literacy and the Question of Authenticity

The rapid spread of the AI video highlights an urgent need for media literacy. Many viewers, despite recognizing exaggeration, still debated whether the clip had any real elements. Researchers emphasize that people often rely on visual cues to determine credibility; when those cues can be manufactured by machines, traditional instincts fail.

Education experts propose integrating digital-authenticity lessons into school curricula, helping future voters recognize manipulated visuals. News organizations are developing watermarking systems and forensic teams to authenticate imagery. These efforts aim not to restrict expression but to equip audiences with tools for discernment.

Institutional and Legal Challenges

Governments worldwide are beginning to address synthetic media through legislation and platform guidelines. Some propose mandatory labeling of AI-generated material or penalties for deceptive use in campaigns. Civil-society groups argue that transparency, rather than censorship, offers the best defense—ensuring that creative works are clearly identified while intentionally misleading content faces accountability.

Regulators face a delicate balance: encouraging innovation while protecting public trust. Overly strict rules could stifle legitimate artistic or journalistic use of AI, yet too little oversight invites chaos in information ecosystems already strained by misinformation.

The Role of Traditional Journalism

In this evolving landscape, journalism remains a stabilizing force. Major outlets are experimenting with authenticity protocols—metadata verification, image provenance tracking, and digital-forensics units—to confirm whether visuals are genuine. Many now issue disclaimers when covering synthetic material, clarifying that content has been digitally altered.

Editors stress that their mission is not to ban synthetic media but to contextualize it. By identifying when a clip is digitally created, they help maintain the public’s ability to trust real documentation when it matters most.

Lessons from the “No Kings” Protests

The weekend’s events revealed both the vitality of civic engagement and the fragility of truth in the digital age. Millions demonstrated peacefully for constitutional accountability, while millions more consumed or commented on AI-generated satire about those same protests. The overlap between activism and artificial imagery illustrates a new frontier of democratic tension—where perception competes with reality for legitimacy.

In that sense, the protests and the video together form a case study in twenty-first-century politics: one side mobilizes through physical presence in the streets; the other counters through virtual spectacle online. Both claim to represent “the people,” and both rely on media to amplify their message.

What Comes Next

As AI tools continue to advance, political actors are likely to use them more often—for humor, persuasion, or distraction. Voters will need to adapt by cultivating skepticism without succumbing to cynicism. The challenge is to maintain confidence in authentic information while accepting that satire and simulation are here to stay.

Technology companies, educators, journalists, and policymakers share responsibility for creating frameworks that promote transparency and accountability. Only by combining innovation with ethical standards can society prevent the erosion of factual discourse.

Conclusion: Protecting Reality in an Artificial Age

The “No Kings” demonstrations and the subsequent AI-video controversy reveal how power and technology now converge in shaping public consciousness. Leadership today is communicated not only through speeches or policies but through carefully engineered imagery that travels faster than facts.

In democracies, truth is a common resource. If citizens lose faith in what they see and hear, governance itself becomes unstable. Artificial intelligence, like every transformative technology before it, can either strengthen that trust or corrode it—depending on how responsibly it is used.

The lesson from this episode is clear: transparency and critical thinking must evolve alongside innovation. The ability to question, verify, and understand digital content is no longer optional; it is essential to preserving democracy in an era where pixels can persuade more powerfully than words.

Sources

- University of Rochester – Video Deepfakes and AI: Meaning, Definition and Technology
- Brennan Center for Justice – Regulating AI Deepfakes and Synthetic Media in the Political Arena
- Carnegie Mellon University – Deepfakes and the Ethics of Generative AI
- Taylor’s University – Deepfakes and Democracy: Navigating the New Reality of AI in Political Communication
- arXiv – Human Perception of AI-Generated Faces